3D reconstruction

This is an assignment from CMU 16720-A. In this assignment, we are going to use eight point algorithm to find the Fundamental Matrix (F) from two images. With this F, we can generate the Essential Matrix E if we have the intrinsic camera matrix K1 and K2 for both cameras. Then, we use SVD to find the extrinsic matrix. With both intrinsic and extrinsic matrix, we now have the camera matrix which could be used to do the triangulation. Until now, we have already been able to construct a 3D model from two images. Finally, we will use RANSAC and bundle adjustment to make the result better.

Eight point algorithm

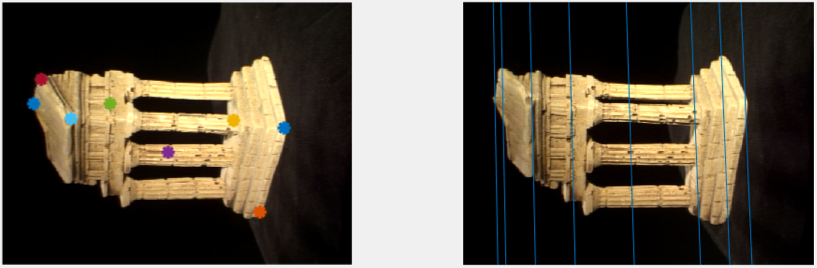

Since the F matrix is a 3×3 matrix, there are 9 parameters with an arbitrary scale factor. In this case, we need eight correspondences to generate eight equations to find the solution. However, here we “at least” need eight correspondences. If we can have more, it is better. So, to implement this algorithm, we first collect correspondences from two images, and then we scale those positions into the range 0~1. Then, we use SVD to find a 3×3 matrix F. Then, we “unscale” the F. Below is a demo that we use this F to find the epipolar line corresponding to those points we pick.

As you can see, the correspondences (right image) correspond to the points (left image) are all on the epipolar lines, which means our F is good.

Triangulation

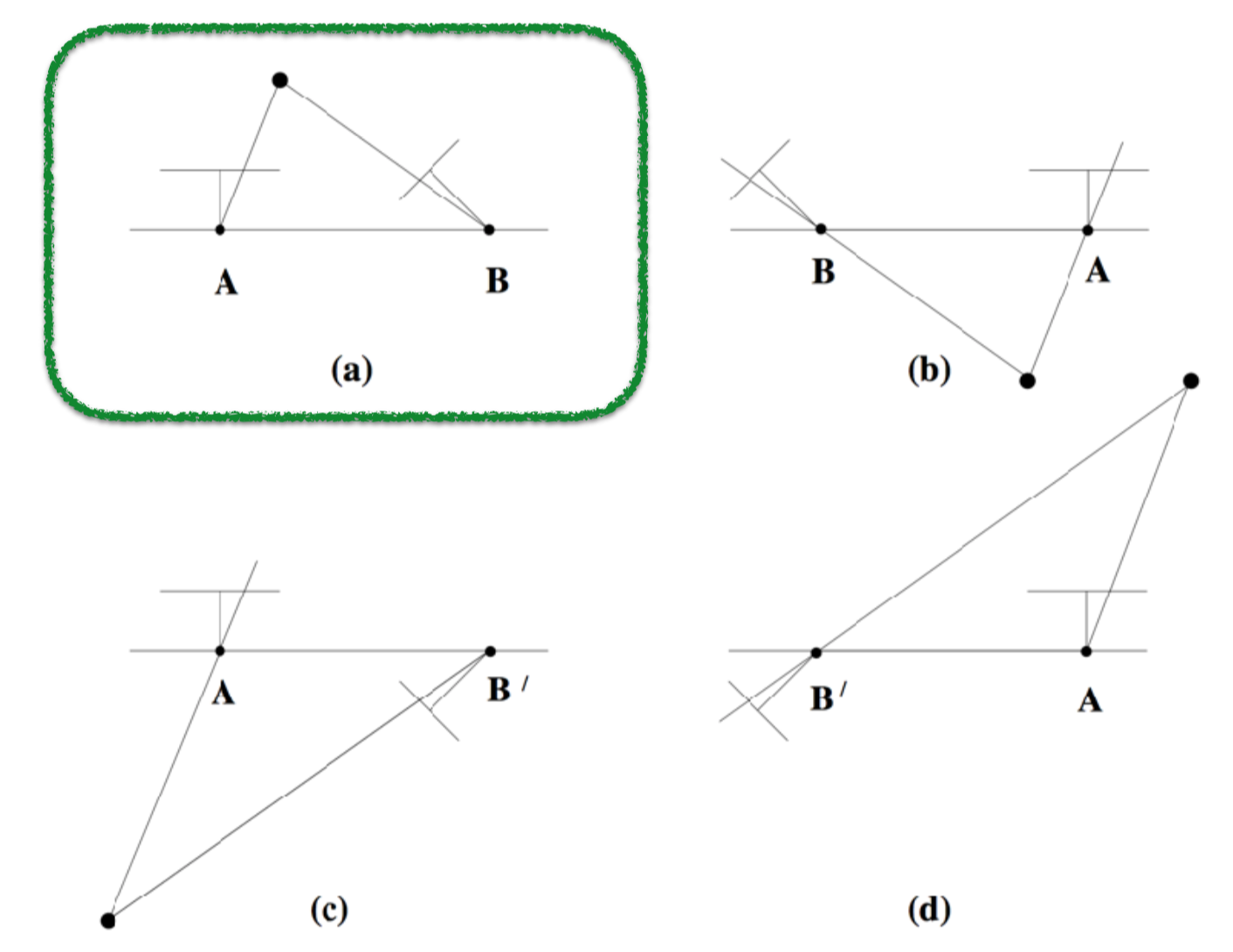

Since we already have the F matrix, and we are also given the camera intrinsic matrix K1 and K2, we can find the essential matrix E. By using this E, we can find 4 possible extrinsic matrix M2s if we fix our M1 to be [I,0]. We can feed those M2s into triangulation and see if the 3D points make sense or not. We want to find the M2 which generate points in front of both cameras.

Reference: CMU 16720-A slides

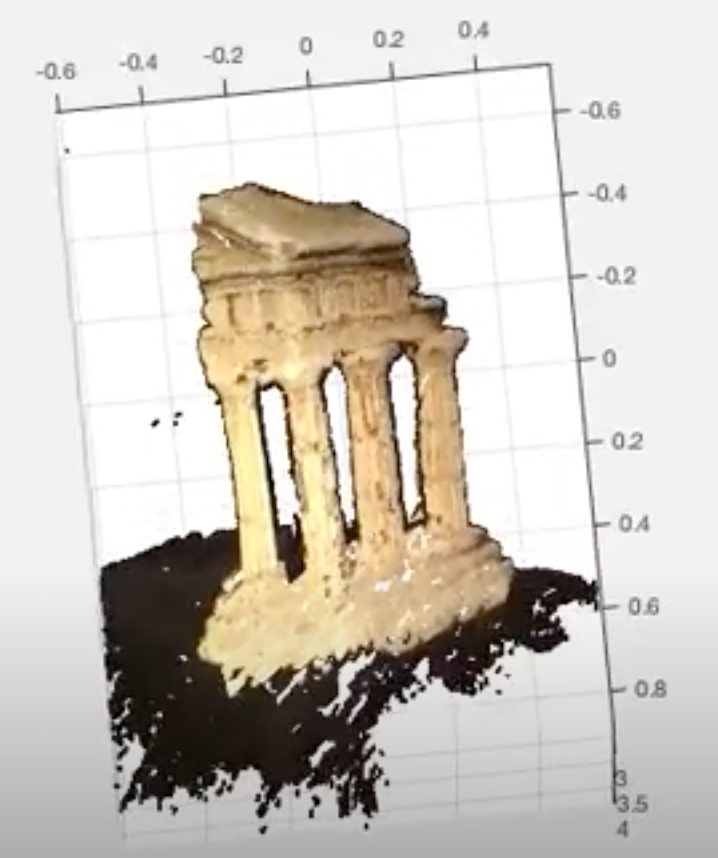

Now, we can use the two positions in a correspondence and their camera matrixes, with SVD again, to get the 3D coordinate. Below is the example of the point cloud of the temple images we show above.

I also compute every x,y image pair in the image and do the same triangulation to build the dense point cloud.

Bundle adjustment

In the previous example, those correspondences used for eight point algorithm and triangulation are given by this course. However, in the real world applications, the correspondences found by the matching algorithm are usually noisy. Thus, we need to use RANSAC to find inliers and use those inliers to do the eight point algorithm again. Next, for those camera matrixes and 3D cloud points, we actually use SVD to find the least square error in a linear function. Based on these parameters and 3D points, we can actually do gradient descent to achieve even smaller error by using a non-linear function. This is what bundle adjustment does. In the bundle adjustment, we want to minimize the total error with regard to the extrinsic camera matrix and all 3D points. To do this, we define our error function as the offset between the ground truth (correspondences) and the 2D positions mapped back from 3D points. Then, we can use this error function with the lsqnonlin function in Matlab to do the gradient descent. Since we already have a roughly working result, we ensure that we start near a local minimum and then we move toward it.

Extra credit

In the extra credit, we want to use more images (6) to build our 3D points, without knowing the extrinsic camera matrix. To do this, I first use eight point algorithm again on first two images, and then I find the M2 for camera2. Next, since we have some common features in image2 and image3 and since those features have been mapped to 3D points by triangulation, I can use pose estimation to get M3 (with 3D points and SVD). I keep doing this until I finish finding M6. Then, I do a bundle adjustment on all those parameters to get a better result. But, since I don’t know the real world scale, the result was not very good.